Physically Realizable Adversarial Examples in LiDAR Object Detection

In this post, I review the paper "Physically Realizable Adversarial Examples for LiDAR Object Detection" by James Tu, Mengye Ren, Siva Manivasagam, Ming Liang, Bin Yang, Richard Du, Frank Cheng, and Raquel Urtasun.

This paper was presented at the Conference on Computer Vision and Pattern Recognition (CVPR) in 2020.

A presentation for this paper is available here


1. Introduction

We live in a world where humans are an integral part of rapidly emerging technologies and innovations. Tasks that seemed unimaginable a few centuries ago are within easy reach today. Innovations in technology continue to ease human life and will continue to do so in the future.

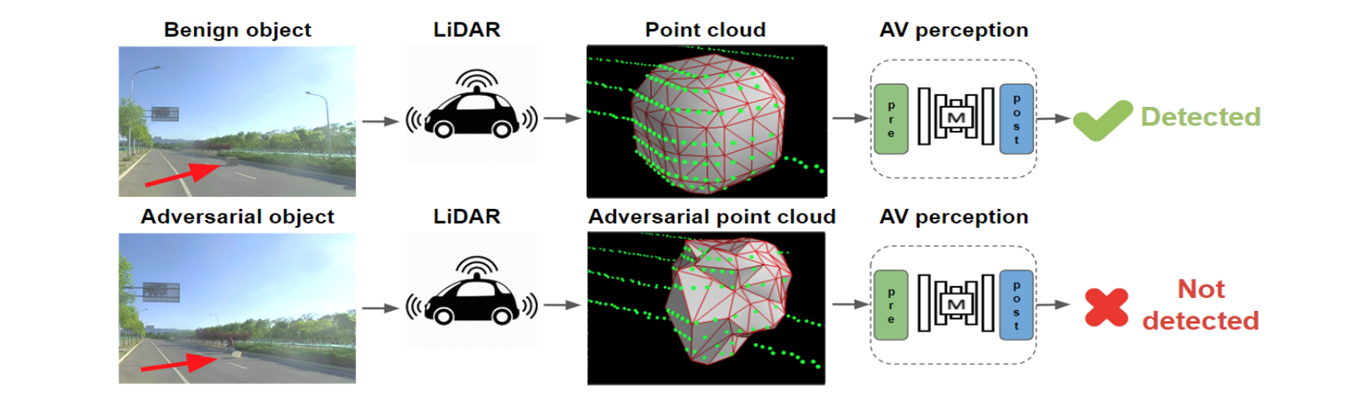

Autonomous driving systems rely heavily on point cloud data to process and understand the scene in front of them. However, they have been shown to be susceptible to adversarial attacks, which heavily impact their accuracy. Before looking at specific attacks, let us define what an adversarial attack is.

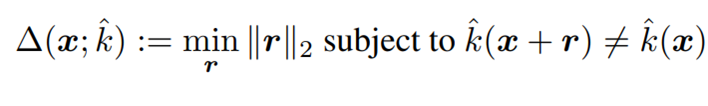

Definition: Adversarial Attacks

For a given classifier, we define an adversarial perturbation as the minimal perturbation r that is sufficient to change the estimated label k(x):

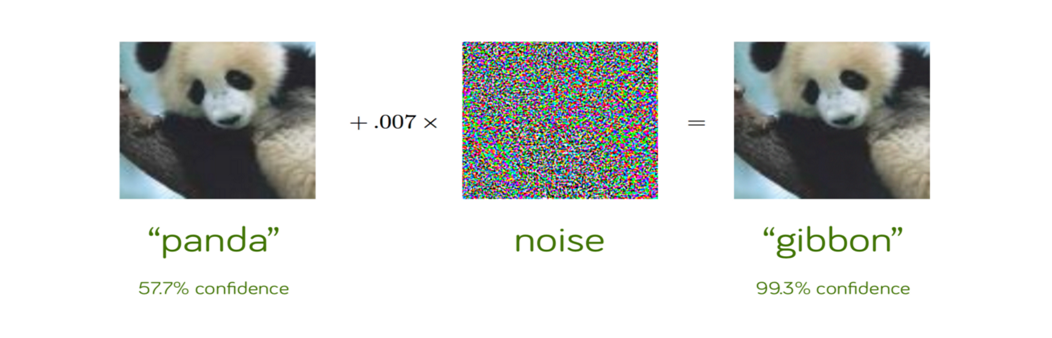

As we can see from the image, just by adding a small perturbation to the input, the machine learning model classifies a panda as a gibbon with high confidence.
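Written out, the definition above can be stated as the following optimization problem. This is a sketch of the usual formulation: the choice of the $\ell_2$ norm to measure the size of r and the symbol $\Delta$ for the minimal perturbation are assumptions, while k(x) is the estimated label as defined above.

$$
\Delta(x; k) = \min_{r} \lVert r \rVert_2 \quad \text{subject to} \quad k(x + r) \neq k(x)
$$

The smaller $\Delta(x; k)$ is for typical inputs x, the less robust the classifier is around those inputs.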

Thus, we need machine learning models that are robust against all kinds of adversarial attacks. This is especially crucial in autonomous driving, where safety and human life are at risk.

A brief overview is as follows:

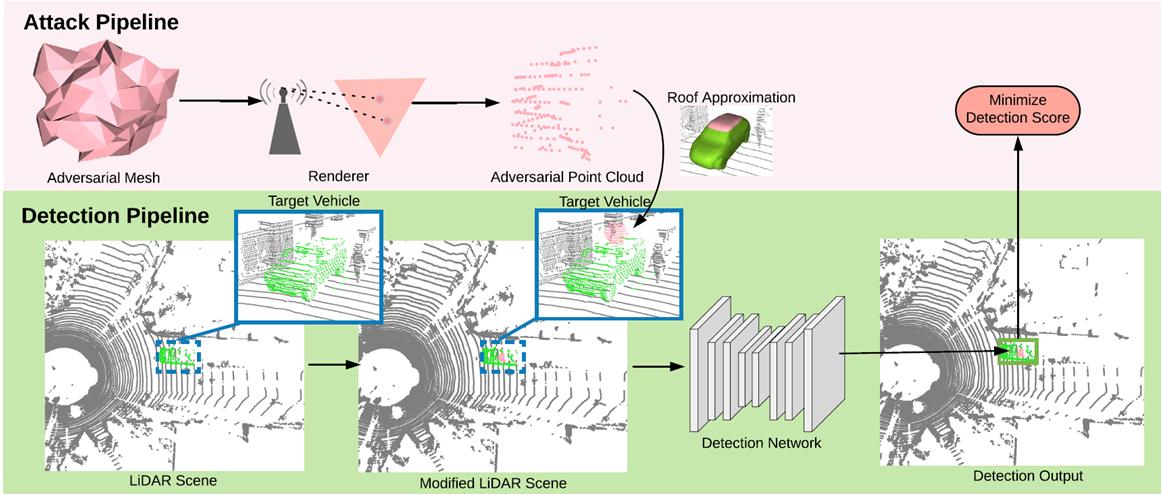

- We construct a physically realizable 3D mesh to be placed on the roof of a vehicle.

- We optimize the mesh to minimise the detection score through a loss function.

- The attack is then evaluated against several target models.

- Finally, a defense mechanism based on data augmentation is applied.

2. Related Work

Let us discuss a few attacks on state-of-the-art machine learning models.

A. Image-based attack

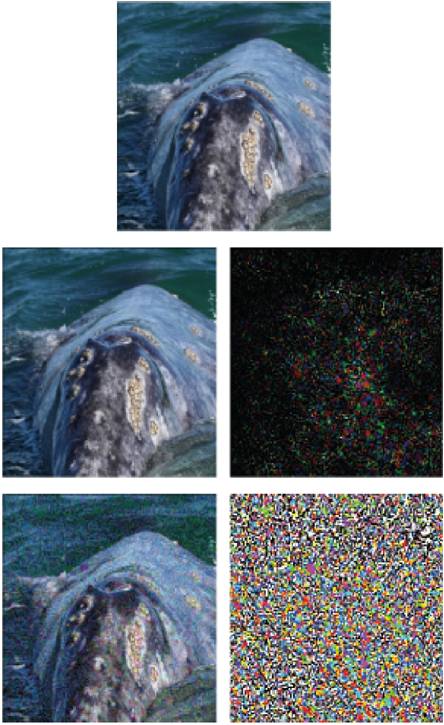

This attack was performed to misclassify an image of a whale. An algorithm called DeepFool (Moosavi-Dezfooli et al. 2016) was proposed to find even smaller perturbations than the state-of-the-art methods of the time. With the proposed image-based attack, the image of the whale is wrongly classified as a turtle. As the image below shows, DeepFool achieves a five times smaller perturbation on a LeNet classifier trained on MNIST.

However, in autonomous driving scenarios we are more interested in the point cloud data as inputs, rather than 2D flat images.

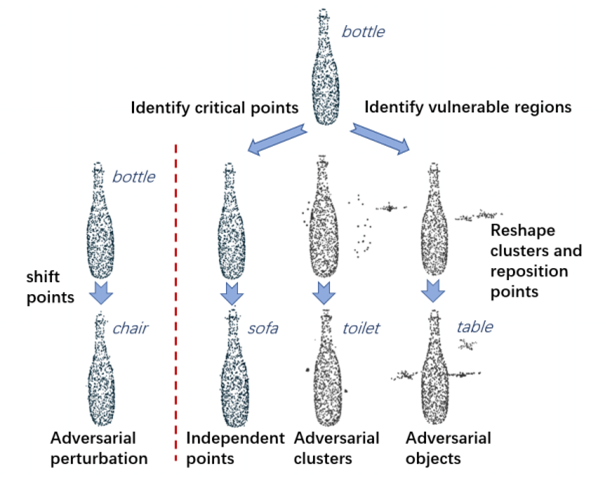

B. Point cloud attack

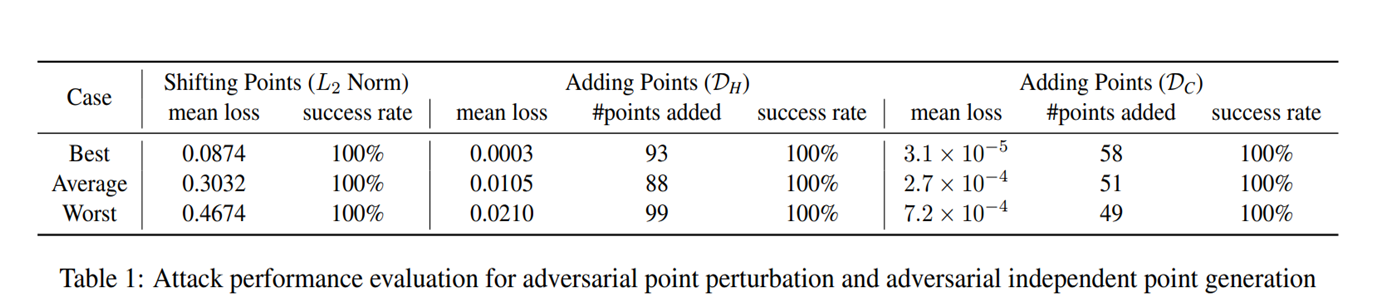

The authors (Chong Xiang et al. 2019) proposed to generate 3D adversarial point clouds. This attack was performed on the state-of-the-art model PointNet, trained on ModelNet40. The authors proposed two types of attack, namely adversarial point perturbation and adversarial point generation. By shifting some points or adding clusters of points to the input point cloud, the classification of a bottle changes to chair and toilet respectively. This shows the vulnerability of our machine learning models and the need for robustness.

The authors claim to achieve a success rate of more than 99% here. However, we need to rethink the nature of these attacks: they are not practical and do not occur in real-world scenarios such as autonomous driving.

C. Physical world attack

The authors (Yulong Cao et al. 2019) proposed LidarAdv, which has many similarities to the approach in this paper.

Firstly, it learns an adversarial mesh that is placed on the road to fool the LiDAR detectors in autonomous vehicles.

Secondly, it is a real-world attack. However, there are some key differences: LidarAdv does not interact with real-world objects, and it deals with only a single frame.

3. Main Contributions

We review five major sub-topics that help us understand the attack.

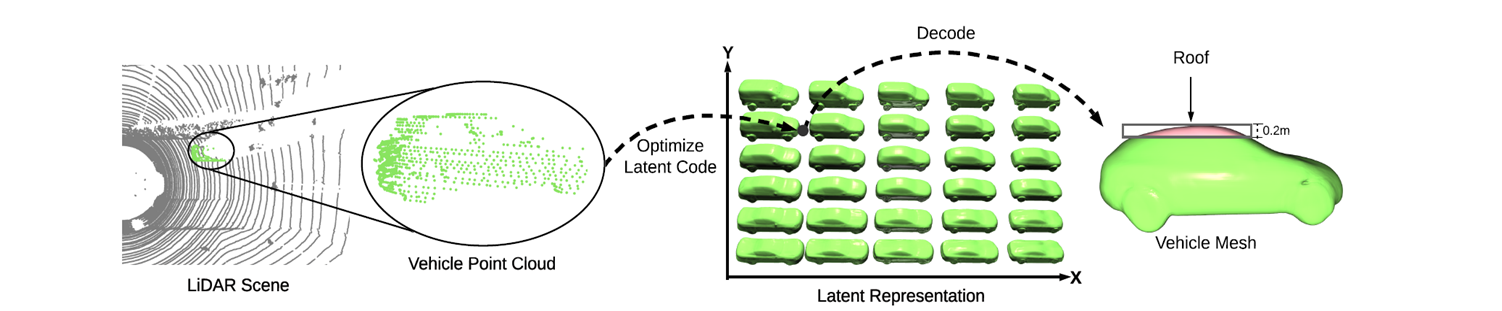

1. Surface Parametrisation

We initialize the mesh as an isotropic sphere with 162 vertices and 320 faces and scale it by 70 cm × 70 cm × 50 cm. A mesh is chosen for its compact representation and precise rendering.
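The vertex and face counts match an icosahedron subdivided twice (12 → 42 → 162 vertices, 20 → 80 → 320 faces), so the initialization can be sketched as below. This is only an illustration: the function names `icosphere` and `scale_mesh` are hypothetical, and reading the scale as the size of the mesh's bounding box in metres is an assumption, not something the text confirms.

```python
import numpy as np

def icosphere(subdivisions=2):
    """Return (vertices, faces) of a unit sphere built by subdividing an icosahedron."""
    t = (1.0 + np.sqrt(5.0)) / 2.0  # golden ratio
    verts = [(-1, t, 0), (1, t, 0), (-1, -t, 0), (1, -t, 0),
             (0, -1, t), (0, 1, t), (0, -1, -t), (0, 1, -t),
             (t, 0, -1), (t, 0, 1), (-t, 0, -1), (-t, 0, 1)]
    faces = [(0, 11, 5), (0, 5, 1), (0, 1, 7), (0, 7, 10), (0, 10, 11),
             (1, 5, 9), (5, 11, 4), (11, 10, 2), (10, 7, 6), (7, 1, 8),
             (3, 9, 4), (3, 4, 2), (3, 2, 6), (3, 6, 8), (3, 8, 9),
             (4, 9, 5), (2, 4, 11), (6, 2, 10), (8, 6, 7), (9, 8, 1)]
    verts = [np.array(v, dtype=float) / np.linalg.norm(v) for v in verts]

    for _ in range(subdivisions):
        midpoint_cache = {}  # one new vertex per edge, shared by both adjacent faces

        def midpoint(i, j):
            key = (min(i, j), max(i, j))
            if key not in midpoint_cache:
                m = verts[i] + verts[j]
                verts.append(m / np.linalg.norm(m))  # push back onto the unit sphere
                midpoint_cache[key] = len(verts) - 1
            return midpoint_cache[key]

        new_faces = []
        for a, b, c in faces:
            ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
            new_faces += [(a, ab, ca), (b, bc, ab), (c, ca, bc), (ab, bc, ca)]
        faces = new_faces

    return np.array(verts), np.array(faces)

def scale_mesh(vertices, size=(0.70, 0.70, 0.50)):
    """Scale a unit sphere so its bounding box is `size` metres (assumed interpretation)."""
    return vertices * (np.array(size) / 2.0)

vertices, faces = icosphere(subdivisions=2)
mesh_vertices = scale_mesh(vertices)
```

During the attack only the vertex positions would be optimized, while the face connectivity stays fixed.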

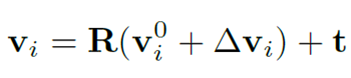

2. LiDAR Point Rendering

We then render LiDAR points by intersecting the sensor's rays with the mesh faces using the Möller-Trumbore intersection algorithm. The union of the rendered adversarial points and the original points forms the modified scene.
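The rendering step can be sketched with a plain NumPy version of the Möller-Trumbore ray-triangle test. This is a minimal, unoptimized illustration: the helper names `ray_triangle_intersect` and `render_points` are hypothetical, the sensor is assumed to sit at a single origin, and the loop over rays and faces is far slower than what a real pipeline would use.

```python
import numpy as np

def ray_triangle_intersect(origin, direction, v0, v1, v2, eps=1e-9):
    """Möller-Trumbore test: distance along the ray to the hit point, or None."""
    edge1 = v1 - v0
    edge2 = v2 - v0
    pvec = np.cross(direction, edge2)
    det = np.dot(edge1, pvec)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle plane
    inv_det = 1.0 / det
    tvec = origin - v0
    u = np.dot(tvec, pvec) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    qvec = np.cross(tvec, edge1)
    v = np.dot(direction, qvec) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = np.dot(edge2, qvec) * inv_det
    return t if t > eps else None  # ignore hits behind the sensor

def render_points(origin, directions, vertices, faces):
    """Nearest mesh hit for every ray; rays that miss the mesh yield no point."""
    points = []
    for d in directions:
        hits = [ray_triangle_intersect(origin, d, *vertices[f]) for f in faces]
        hits = [t for t in hits if t is not None]
        if hits:
            points.append(origin + min(hits) * d)
    return np.array(points).reshape(-1, 3)

# One triangle two metres in front of the sensor, one ray that hits it and one that misses.
tri_vertices = np.array([[-1.0, -1.0, 2.0], [1.0, -1.0, 2.0], [0.0, 1.0, 2.0]])
tri_faces = np.array([[0, 1, 2]])
rays = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 0.0]])
adv_points = render_points(np.zeros(3), rays, tri_vertices, tri_faces)

# The modified scene is the union of the original and the rendered points.
scene_points = np.array([[5.0, 0.0, 0.0]])
modified_scene = np.vstack([scene_points, adv_points])
```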

3. Rooftop fitting

We then locate the position on the roof of the vehicle where the mesh will be placed. The vehicles are represented as signed distance functions (SDFs) and projected to a latent space using Principal Component Analysis (PCA).

Then, the marching cubes algorithm is applied to obtain a fitted CAD model. Finally, we use the vertices in the top 0.2 m vertical range to approximate the rooftop region.
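The last step, picking the rooftop region from the fitted model, can be sketched as follows. The sketch assumes the vehicle vertices are given in metres with the z axis pointing up; the function names and the choice of the region's centroid as the placement position are hypothetical and only serve as an illustration.

```python
import numpy as np

def rooftop_region(vertices, height_range=0.2):
    """Vertices within `height_range` metres below the highest vertex (z-up assumed)."""
    z = vertices[:, 2]
    return vertices[z >= z.max() - height_range]

def rooftop_center(vertices, height_range=0.2):
    """Centroid of the rooftop region, used here as a hypothetical placement position."""
    return rooftop_region(vertices, height_range).mean(axis=0)

# Toy "vehicle" with four vertices; only the two highest ones fall in the top 0.2 m.
car_vertices = np.array([
    [0.0, 0.0, 0.00],
    [1.0, 0.0, 1.50],
    [0.0, 1.0, 1.45],
    [1.0, 1.0, 1.20],
])
roof = rooftop_region(car_vertices)
center = rooftop_center(car_vertices)
```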

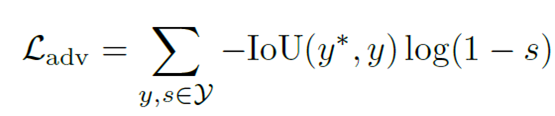

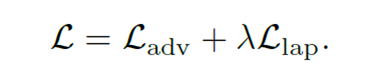

4. Loss Function

The authors proposed a combination of an adversarial loss and a Laplacian loss. Only the relevant bounding box proposals are considered, i.e., those for which:

- The confidence score must be > 0.1

- The IoU (Intersection over Union) with ground truth bounding box should be > 0.1

The loss tries to minimize the confidence of the relevant candidates. For this, a binary cross-entropy term is applied to each candidate's confidence score, weighted by its IoU with the ground truth box.
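One plausible reading of this loss is sketched below: each relevant proposal contributes a binary cross-entropy term with target label 0 (not detected), multiplied by its IoU. The function name `adversarial_loss`, its argument layout, and the small `eps` added for numerical stability are assumptions made for this illustration.

```python
import numpy as np

def adversarial_loss(scores, ious, score_thresh=0.1, iou_thresh=0.1, eps=1e-12):
    """IoU-weighted binary cross-entropy that pushes relevant scores toward zero.

    scores: confidence score of each proposal
    ious:   IoU of each proposal with the ground truth box of the target vehicle
    """
    scores = np.asarray(scores, dtype=float)
    ious = np.asarray(ious, dtype=float)
    # Keep only the relevant proposals: confident enough and overlapping the target.
    relevant = (scores > score_thresh) & (ious > iou_thresh)
    # BCE with target 0 reduces to -log(1 - score); weight each term by its IoU.
    return float(np.sum(-ious[relevant] * np.log(1.0 - scores[relevant] + eps)))

# Only the first proposal passes both thresholds in this toy example.
loss = adversarial_loss(scores=[0.9, 0.05, 0.5], ious=[0.8, 0.9, 0.05])
```

Confident proposals that overlap the target well dominate the sum, so lowering their scores reduces the loss the most.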

Finally, the Laplacian loss is used as a regulariser to maintain the surface smoothness of the mesh.
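A common form of such a regulariser penalizes the squared distance between each vertex and the centroid of its neighbours. The sketch below uses this uniform-weight variant, which is an assumption (the exact weighting used by the authors is not given here), and the function name `laplacian_loss` is hypothetical. In a full objective this term would be added to the adversarial term with a weighting coefficient, which is likewise assumed rather than taken from the text.

```python
import numpy as np

def laplacian_loss(vertices, faces):
    """Sum of squared distances between each vertex and the centroid of its neighbours."""
    neighbors = [set() for _ in range(len(vertices))]
    for a, b, c in faces:  # vertices sharing an edge are neighbours
        neighbors[a].update((b, c))
        neighbors[b].update((a, c))
        neighbors[c].update((a, b))
    loss = 0.0
    for i, nbrs in enumerate(neighbors):
        if nbrs:
            centroid = vertices[list(nbrs)].mean(axis=0)
            loss += float(np.sum((vertices[i] - centroid) ** 2))
    return loss

# Flat fan of six triangles around a centre vertex, then the same fan with a spike.
angles = np.linspace(0.0, 2.0 * np.pi, 6, endpoint=False)
ring = np.stack([np.cos(angles), np.sin(angles), np.zeros(6)], axis=1)
flat = np.vstack([np.zeros((1, 3)), ring])
fan_faces = np.array([(0, 1 + i, 1 + (i + 1) % 6) for i in range(6)])

spiky = flat.copy()
spiky[0, 2] = 1.0  # pull the centre vertex out of the plane

smooth_loss = laplacian_loss(flat, fan_faces)
spiky_loss = laplacian_loss(spiky, fan_faces)  # larger than smooth_loss
```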

5. Attack Algorithms

- White-box attacks

  - Knowledge of the model architecture

  - Gradient of the loss w.r.t. the mesh vertices

  - Projected gradient descent

- Black-box attacks

  - Non-differentiable pre-processing stages

  - PIXOR uses occupancy voxels

  - Genetic algorithm (mutation and crossover)
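The two strategies listed above can be contrasted on a toy problem. In the sketch below, the "detection score" is a simple quadratic stand-in for a real detector, the box constraint `bound` stands in for the limits on the mesh vertices, and all function names and hyperparameters are hypothetical; it illustrates the optimization loops only, not the authors' implementation.

```python
import numpy as np

def pgd_attack(grad_fn, x0, bound, step=0.05, iters=100):
    """White-box: follow the gradient, then project back onto the box |x| <= bound."""
    x = x0.copy()
    for _ in range(iters):
        x = x - step * grad_fn(x)      # gradient descent step on the loss
        x = np.clip(x, -bound, bound)  # projection onto the feasible set
    return x

def genetic_attack(score_fn, x0, bound, pop_size=16, generations=60, sigma=0.05, seed=0):
    """Black-box: only score_fn(x) is queried, its gradient is never used."""
    rng = np.random.default_rng(seed)
    noise = sigma * rng.standard_normal((pop_size,) + x0.shape)
    pop = np.clip(x0 + noise, -bound, bound)
    pop[0] = x0  # keep the starting point in the population
    for _ in range(generations):
        scores = np.array([score_fn(p) for p in pop])
        elite = pop[np.argsort(scores)[: pop_size // 2]]  # lower score = better attack
        parents_a = elite[rng.integers(len(elite), size=pop_size)]
        parents_b = elite[rng.integers(len(elite), size=pop_size)]
        mask = rng.random(parents_a.shape) < 0.5           # uniform crossover
        children = np.where(mask, parents_a, parents_b)
        children = children + sigma * rng.standard_normal(children.shape)  # mutation
        pop = np.clip(children, -bound, bound)
        pop[0] = elite[0]                                  # elitism: never lose the best
    scores = np.array([score_fn(p) for p in pop])
    return pop[np.argmin(scores)]

# Toy stand-in for the detection score, minimized at x = 1 (outside the allowed box).
def toy_score(x):
    return float(np.sum((x - 1.0) ** 2))

def toy_grad(x):
    return 2.0 * (x - 1.0)

x0 = np.zeros((4, 3))  # e.g. offsets of four mesh vertices
x_white = pgd_attack(toy_grad, x0, bound=0.5)
x_black = genetic_attack(toy_score, x0, bound=0.5)
```

With gradients available, the white-box loop reaches the boundary of the box in a few steps, while the black-box search needs many more score evaluations to approach the same point.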

4. Experimental setup

A. Dataset

KITTI (car class only)

B. Sensor

Velodyne HDL-64E

C. Target models

1. PIXOR

2. PIXOR(d)

3. PointRCNN

4. PointPillar

5. Results

The authors use two evaluation metrics to measure the performance of the attacks.

1. Attack Success rate:

This metric measures the percentage of cases in which the target vehicle is successfully detected originally but no longer detected after the attack. For example, an ASR of 80% means that in 80% of cases the vehicle was not detected once the attack was applied. A vehicle counts as detected if a detection has an IoU greater than 0.7 with it.
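The metric can be sketched as a small function. It assumes that, for each target vehicle, the best IoU between any detection and the vehicle's ground truth box is available before and after the attack; the function name and input layout are hypothetical.

```python
import numpy as np

def attack_success_rate(iou_before, iou_after, iou_thresh=0.7):
    """Fraction of originally detected vehicles that are missed after the attack."""
    iou_before = np.asarray(iou_before, dtype=float)
    iou_after = np.asarray(iou_after, dtype=float)
    detected_before = iou_before > iou_thresh
    detected_after = iou_after > iou_thresh
    if not detected_before.any():
        return 0.0  # nothing was detected originally, so there is nothing to attack
    hidden = detected_before & ~detected_after
    return float(hidden.sum() / detected_before.sum())

# Four of the five vehicles are detected originally; three of those are hidden.
asr = attack_success_rate(
    iou_before=[0.90, 0.80, 0.75, 0.50, 0.95],
    iou_after=[0.20, 0.85, 0.10, 0.00, 0.30],
)
```

Vehicles that were already missed before the attack are excluded from the denominator, so the metric only credits the attack for detections it actually removes.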

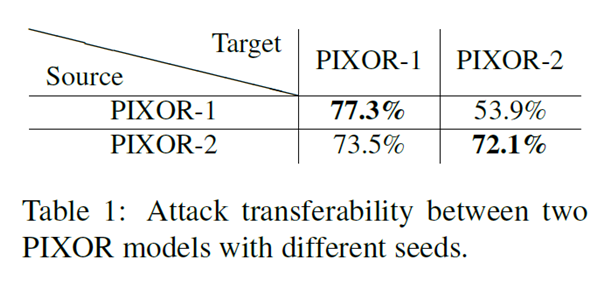

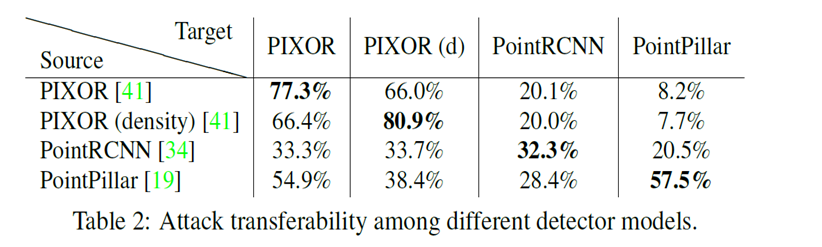

A. Attack transferability in similar models

Here two variants of PIXOR are trained using different seeds, and an adversarial mesh is learned separately for each. The table reflects a high degree of transferability across models with identical architecture.

B. Attack transferability across different architectures

Here attack transferability is evaluated across all four model architectures. We observe that meshes learned against PointRCNN and PointPillar can be used to attack PIXOR and PIXOR(d), but not vice versa. This is mainly attributed to the additional point-level reasoning employed in PointRCNN and PointPillar.

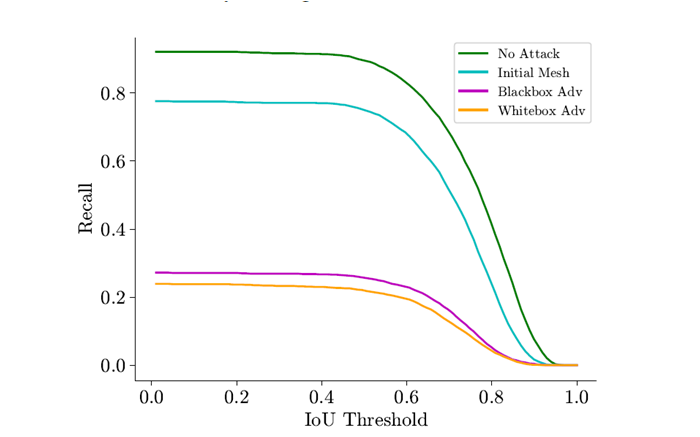

2. Recall-IoU curve:

This curve shows how the recall changes over a range of IoU thresholds.
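A minimal sketch of how such a curve can be computed is given below. It assumes that for each ground truth vehicle the best IoU with any detection is known (0 if there is no detection at all); the function name is hypothetical.

```python
import numpy as np

def recall_iou_curve(best_ious, thresholds):
    """Recall at each IoU threshold: share of vehicles whose best IoU reaches it."""
    best_ious = np.asarray(best_ious, dtype=float)
    return np.array([(best_ious >= t).mean() for t in thresholds])

thresholds = [0.1, 0.5, 0.7, 0.8]
recall = recall_iou_curve([0.9, 0.75, 0.4, 0.0], thresholds)
```

Recall can only stay level or fall as the threshold increases, so a successful attack shows up as a curve that starts lower and stays below the curve of the unattacked detector.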

A. Recall-IoU curve (PIXOR(d))

The curve plots recall for four different attacks at various IoU thresholds. We see a drop in recall from the start of the curve, caused by additional false negatives in the detections. This can be seen for both white-box and black-box attacks, which perform similarly.

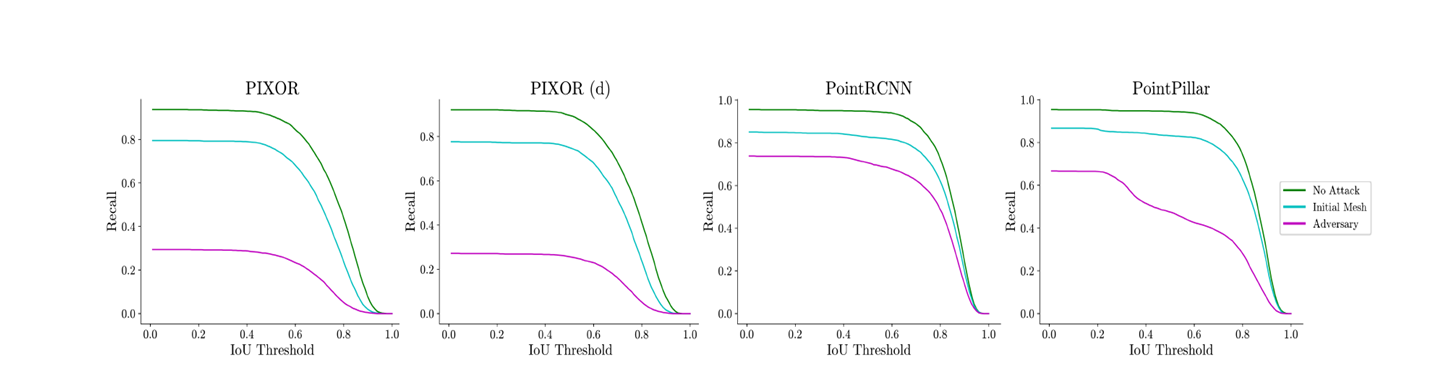

B. Recall-IoU curve (All 4 models)

We evaluate the performance on the curve against all four target models individually. We observe that the PIXOR models are less robust. This is mainly due to the input representation: they convert the point cloud to voxels, which discards much information. The attack is weaker on PointRCNN, as PointRCNN treats every point as a bounding box anchor. To understand this, the authors visualize the attack success rate at various locations in the scene for the different detector models.

Vehicles close to the sensor register more LiDAR points, making it extremely difficult to suppress all proposals. This suggests that PointRCNN is more robust against the attack near the LiDAR sensor and less robust at larger distances, where fewer points are registered.

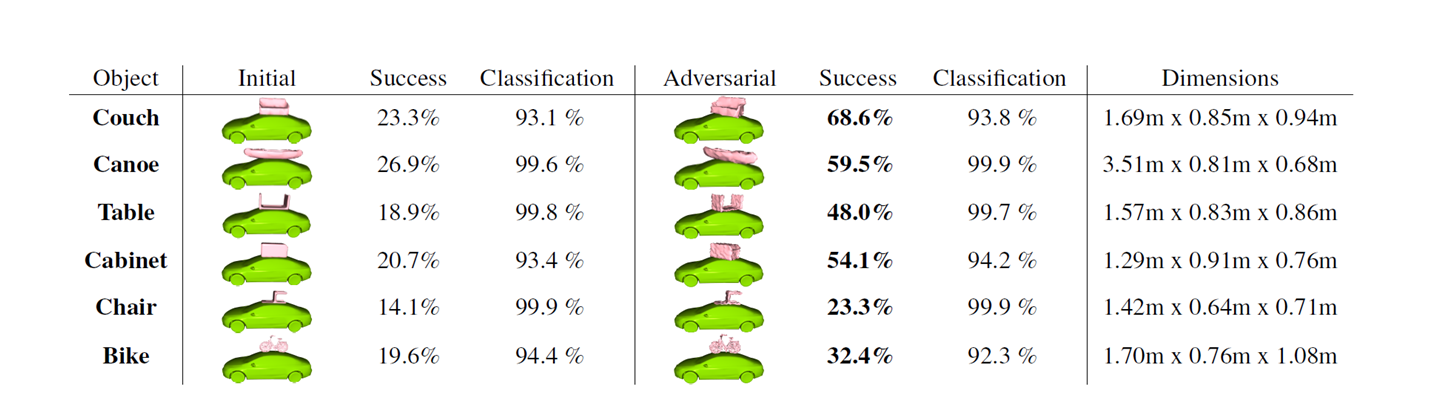

3. A realistic experiment:

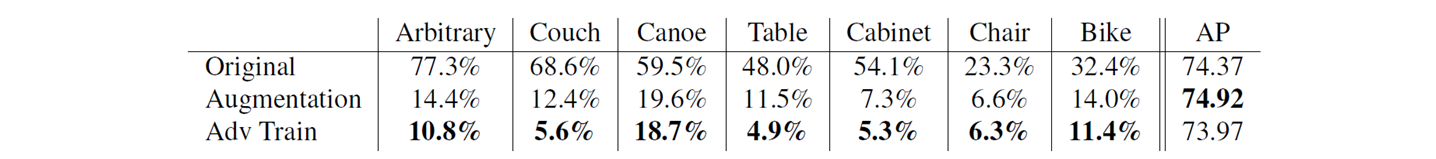

Here adversaries are learned from common objects. Six objects (couch, chair, table, bike, canoe, and cabinet) are taken from ShapeNet.

The main goal is to learn a universal adversary while perturbing the shape so little that it still resembles the common object. From the table, we achieve a significantly higher attack success rate on all the common shapes. This demonstrates the vulnerability of the detectors.

4. Defense mechanism

A defense mechanism is employed by retraining the PIXOR model with random data augmentation and adversarial training.

We can observe a drop in the attack success rate after augmentation and adversarial training.

6. Conclusion

The field of autonomous driving urgently needs robust, safety-critical systems. This is especially pertinent when people's lives are at stake. Current state-of-the-art detectors are vulnerable to adversarial attacks, and improving them requires significant further research.

The authors of this paper propose robust, universal, and physically realizable adversarial examples capable of hiding vehicles from LiDAR detectors. The attack achieves a success rate of 80% at an IoU threshold of 0.7 against a strong LiDAR detector. Although the defense mechanisms based on data augmentation and adversarial training are strong, they are not fully secure against adversarial attacks.

An attacker could simply 3D print the mesh and place it on the rooftop of a vehicle to minimise the detection score. Thus, we emphasise the need for more robust models in the context of autonomous driving.